AI System Disclosures

In the words of one commenter, “one of the greatest barriers to AI accountability is the lack of a standard accountability reporting framework.”91 As the NIST AI RMF proposes, AI system developers and deployers should push out more information about (1) the AI system itself, including the training data and model, and (2) about AI system use, including the fact of its use, adverse incident reporting, and outputs.92 Some information should be shared with the general public, while sensitive information might be disclosed only to groups trusted to ensure the necessary safeguards are in place, including government.

One commenter stated that “[i]f adopted across the industry, transparency reports would be a helpful mechanism for recording the maturing practice of responsible AI and charting cross-industry progress.”93 The EU is requiring transparency reports for large digital platforms.94 While transparency is critical in the AI context, non-standard disclosure at the discloser’s discretion is less useful as an accountability input than standard, regular disclosure.95

A family of informational artifacts – including “datasheets,” “ model cards,” and “system cards” – can be used to provide structured disclosures about AI models and related data.

Datasheets (also referred to as data cards, dataset sheets, data statements, or data set nutrition labels)96 provide salient information about the data on which the AI model was trained, including the “motivation, composition, collection process, [and] recommended uses” of the dataset.97 Several commenters recommended that AI system developers produce datasheets.98

Model cards disclose information about the performance and context of a model, including:99

- Basic information;

- On-label (intended) and off-label (not intended, but predictable) use cases;

- Model performance measurements in terms of the relevant metrics depending on various factors, including the affected group, instrumentation, and deployment environment;

- Descriptions of training and evaluation data

- Ethical considerations, caveats, and recommendations

System cards are used to make disclosures about how entire AI systems, often composed of a series of models working together, perform a specific task.100 A system card can show step-by-step how the system processes actual input, for example to compute a ranking or make a prediction. Proponents state that, in addition to the disclosures about individual models set forth in model cards, system cards are intended to consider factors including deployment contexts and real-world interactions.101

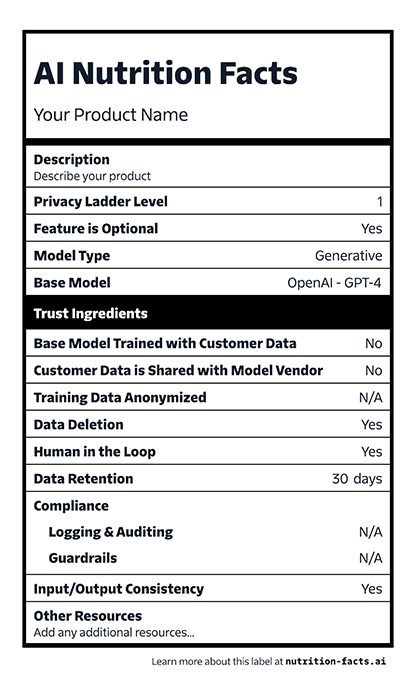

These artifacts might be formatted in the form of a “nutritional label,” which would present standardized information in an analogous format to the “Nutrition Facts” label mandated by the FDA. Twilio’s “AI Nutrition Facts” project shows what a label might look like in the AI context, pictured on the right.102

Model cards and system cards are often accompanied by lengthier technical reports describing the training and capabilities of the system.103

Many AI system developers have begun voluntarily releasing these artifacts.104 The authors of such artifacts often state that they are written to conform to the recommendations in the same paper by Margaret Mitchell,105 which proposes a list of model card sections and details to consider providing in each one.106 However, the actual instantiations of these artifacts vary significantly in breadth and depth of content. For instance:

- The model card annexed to the technical paper accompanying Google’s PaLM-2, which is used by the Bard chatbot, discusses intended uses and known limitations. However, the card lacks detail about the training data used, and no artifact was released for the Bard chat service as of this writing.107

- Meta’s model card for LLaMA contained details about the training data used, including specific breakdowns by source (e.g., 67% from CCNet; 4.5% from GitHub).108 However, Meta’s LLaMA 2 model card contained considerably less detail, noting only that it was trained on a “new mix of data from publicly available sources, which does not include data from Meta’s products or services” without describing specific sources of data.109

- OpenAI provided a technical report for GPT-4 that – beyond noting that GPT-4 was a “Transformer-style model pre-trained to predict the next token in a document, using both publicly available data (such as internet data) and data licensed from third-party providers” – declined to provide “further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.”110

- By contrast, BLOOMZ, a large language model trained by the BigScience project, is accompanied by a concise model card describing use, limitations, and training, as well as a detailed dataset card describing the specific training data sources and a technical paper describing the finetuning method.111

The above illustrates differences in approach that may or may not be justified by the underlying system. These differences frustrate meaningful comparison of different models or systems. The differences also make it difficult to compare the adequacy of the artifacts themselves and distinguish obfuscation from unknowns. For example, one might wonder whether a disclosure’s emphasis on system architecture at the expense of training data, or fine-tuning at the expense of testing and validation, is due to executive decisions or to system characteristics. Like dense privacy disclosures, idiosyncratic technical artifacts put a heavy burden on consumers and users. The lack of standardization may be hindering the realization of these artifacts’ potential effectiveness both to inform stakeholders and to encourage reflection by AI actors. Many commenters agree that datasheets, system cards, and model cards have an important place in the AI accountability ecosystem.112 At the same time, a number also expressed reservations about their current effectiveness, especially without further standardization and, possibly, regulatory adoption.113

Whatever information is developed for disclosure, how it is disclosed will depend on the intended audience, which might include impacted people and communities, users, experts, developers, and/or regulators.114 The content and form of the disclosure will vary. Some disclosures might be confidential, for example information about large AI training runs provided to the government, especially concerning AI safety and governance.115 Other disclosures might be set out in graphical form that is accessible to a broad audience of users and other affected people, such as a “nutritional label” for AI system features.116 AI nutritional labels, by analogy to nutritional labels for food, present the most important information about a model in a relatively brief, standardized, and comparable form. Specific standards for nutritional label artifacts might specify the content required to be included in such a label. To address the varying levels of detail required for different audiences, disclosures should be designed to provide information for each system at multiple different levels of depth and breadth, “allowing everyone from the general populace to the research level expert to understand it at their own level.”117

Recognizing the shortfalls of unsynchronized disclosures among model developers, commenters largely agreed that standardizing informational artifacts and promoting comparability between them is an important goal in moving toward more effective AI accountability.118 Several commenters called for governmental involvement in the development of these standards.119 For example, the EU AI Act will require regulated entities – principally developers – to disclose (to regulators and the public) information about high-risk AI systems and authorize the European Commission to develop common specifications if needed.120 Proposed required documentation or disclosures would include information about the data sources used for training, system architecture and general logic, classification choices, the relevance of different parameters, validation and testing procedures, and performance capabilities and limitations.121

The federal government could also facilitate access to disclosures as it has in other contexts, such as the SEC’s Electronic Data Gathering Analysis and Retrieval (EDGAR) platform or the FDA’s Adverse Events Reporting System (FAERS) platform. To the extent that NIST and others are engaged in developing voluntary transparency best practices, this is a critical first step to standardization and possible regulatory development.

91 PWC Comment at A8. See also id. at 13 (“Standardized reporting — including references to the agreed trustworthy AI framework, elucidation of the evaluation criteria, and articulation of findings — would help engender public trust.”); Ernst & Young Comment at 10 (“Standardized reporting should be considered where practical”); Greenlining Institute (GLI) at 3 (“AI accountability mechanisms could look like requiring risk assessments in the use of these systems, requiring the disclosure of how decisions are made as part of these systems, and requiring the disclosure of how these systems are tested, validated for accuracy and the key metrics and definitions in those tests - such as how fairness or an adverse decision are defined and shared with regulators and academia.”); CDT Comment at 50 (“The government should take steps that set an expectation of transparency around the development, deployment, and use of AI. In higher-risk settings, such as where algorithmic decision-making determines access to economic opportunity, that may include transparency requirements”).

92 NIST AI RMF at 15-16.

93 Governing AI, supra note 47, at 23.

94 See European Commission, supra note 22.

95 See Evelyn Douek, “Content Moderation as Systems Thinking,” 136 Harv. L. Rev. 526, 572-82 (2022) (discussing platform transparency reports as “transparency theater.”).

96 See Timnit Gebru, et al., Datasheets for Datasets, Communications of the ACM, Vol 64, No. 12, at 86-92 (Dec. 2021). See also Google DeepMind Comment at 24 (referring to “data cards”); Hugging Face Comment at 5 (referring to “Dataset Sheets and Data Statements”); Stoyanovich Comment at 10-11 (referring to the “Datasheet Nutrition Label project”); Centre for Information Policy Leadership Comment at 9 (referring to “data set nutrition labels”).

97 Id., Gebru, et al., at 87.

98 See, e.g., GovAI Comment at 11; Google DeepMind Comment at 24; Bipartisan Policy Center Comment at 7; Centre for Information Policy Leadership Comment at 13; Data & Society Comment at 8.

99 Adapted from Margaret Mitchell, Simone Wu, Andrew Zaldivar, Parker Barnes, Lucy Vasserman, Ben Hutchinson, Elena Spitzer, Inioluwa Deborah Raji, and Timnit Gebru, Model Cards for Model Reporting, FAT* '19: Proceedings of the Conference on Fairness, Accountability, and Transparency, at 220-229 (Jan. 2019).

100 See Nekesha Green et al., System Cards, A New Resource for Understanding How AI Systems Work, Meta AI (Feb. 23, 2022) (“Many machine learning (ML) models are typically part of a larger AI system, a group of ML models, AI and non-AI technologies that work together to achieve specific tasks. Because ML models don’t always work in isolation to produce outcomes, and models may interact differently depending on what systems they’re a part of, model cards — a broadly accepted standard for model documentation — don’t paint a comprehensive picture of what an AI system does. For example, while our image classification models are all designed to predict what’s in a given image, they may be used differently in an integrity system that flags harmful content versus a recommender system used to show people posts they might be interested in.”).

101 OpenAI Comment at 4 (“We believe that in most cases, it is important for these documents to analyze and describe the impacts of a system – rather than focusing solely on the model itself – because a system’s impacts depend in part on factors other than the model, including use case, context, and real world interactions. Likewise, an AI system’s impacts depend on risk mitigations such as use policies, access controls, and monitoring for abuse. We believe it is reasonable for external stakeholders to expect information on these topics, and to have the opportunity to understand our approach.”).

102 Twilio, AI Nutrition Facts.

103 See, e.g., Google, PaLM 2 Technical Report (2023); OpenAI, GPT-4 Technical Report, arXiv (March 2023). See also Andreas Liesenfeld, Alianda Lopez, and Mark Dingemanse, Opening up ChatGPT: Tracking Openness, Transparency, and Accountability in Instruction-Tuned Text Generators, CUI '23: Proceedings of the 5th International Conference on Conversational User Interfaces, at 1-6 (July 2023) (surveying the openness of various AI systems, including the disclosure of preprints and academic papers)..

104 See, e.g., Hugging Face Comment at 5; Anthropic Comment at 4; Stability AI Comment at 12; Google DeepMind Comment at 24.

105 Mitchell et al., supra note 99. Others have begun proposing similar lists of elements that should be included in AI system documentation, including the proposed EU AI Act. See EU AI Act, supra note 21 (Annex IV) (listing categories of information that should be included in technical documentation for high-risk AI systems to be made available to government authorities).

106 See, e.g., Google, supra note 103 (citing Mitchell et al., supra note 99); OpenAI, supra note 103, at 40 (same); Hugo Touvron et al., Llama 2: Open Foundation and Fine-Tuned Chat Models, Meta AI (July 18, 2023), at 77 (same)).

107 Kennerly Comment at 4-5; Google, supra note 103, at 91-93.

108 Meta Research, LLaMA Model Card (March 2023) (“CCNet [67%], C4 [15%], GitHub [4.5%], Wikipedia [4.5%], Books [4.5%], ArXiv [2.5%], Stack Exchange [2%]”).

109 Touvron et al., supra note 106, at 5.

110 OpenAI, supra note 103, at 2; see also The Anti-Defamation League (ADL) Comment at 5 (“Because there is no reporting process that requires regular or comprehensive transparency, we have little information into the decisions made via RLHF and how those decisions could negatively impact the model.”).

111 See, e.g., Hugging Face BigScience Project, BLOOMZ & mT0 Model Card; Hugging Face BigScience Project, xP3 Dataset Card; Niklas Muennighoff, et al., Crosslingual Generalization through Multitask Finetuning, arXiv (May 2023). See also Kennerly Comment at 6.

112 See, e.g., Center for American Progress Comment at 8; Salesforce Comment at 7; Hugging Face Comment at 5; Anthropic Comment at 4.

113 Centre for Information Policy Leadership Comment at 5 (“Absent clear standards for such documentation efforts, organizations may take inconsistent approaches that result in the omission of key information.”); U.C. Berkeley Researchers Comment at 20 (“Current practices of communication, for example releasing long ‘model cards,’ ‘system cards,’ or audit results are incredibly important, but are not serving the needs of users or affected people and communities.”); Data & Society Comment at 8 (practices and frameworks for documentation and disclosure “remain voluntary, scattered, and wholly unsynchronized” without binding regulatory requirements).

114 See, e.g., Hugging Face Comment at 5 (focused on “a model’s prospective user”); Association for Computing Machinery (ACM) Comment at 3 (purpose of artifacts is to “enable experts and trained members of the community to understand [models] and evaluate their impacts”); Mozilla Comments at 11 (model cards and datasheets can “help regulators as a starting point in their investigations”); CDT Comment at 23 (standardization of system cards and datasheets “can make it easier, particularly for users, to understand the information provided”); Google DeepMind Comment at 12, 24 (“Model and data cards can be useful for various stakeholders, including developers, users, and regulators,” and “[w]here appropriate, additional technical information relating to AI system performance should also be provided for expert users and reviewers like consumer protection bodies and regulators”); U.S. Chamber of Commerce Comment at 3 (AI Service Cards should be designed for the “average person” to understand).

115 See Credo AI Comment at 8 (government should consider adopting “[t]ransparency disclosures that should be made available to downstream application developers and to the appropriate regulatory or enforcement body within the U.S. government - not the general public - to ensure they are fit for purpose”); see also First Round White House Voluntary Commitments at 2-3 (documenting commitments by AI developers to “[w]ork toward information sharing among companies and governments regarding trust and safety risks, dangerous or emergent capabilities, and attempts to circumvent safeguards” by “facilitat[ing] the sharing of information on advances in frontier capabilities and emerging risks and threats”)..

116 See, e.g., Global Partners Digital Comment at 15; Salesforce Comment at 7; Stoyanovich Comment at 5, 10-11; Bipartisan Policy Center Comment at 7; Kennerly Comment at 2. C.f. 21 C.F.R. § 101.9(d) (imposing standards for nutritional labels in food).

117 ACT-IAC Comment at 14; see also Certification Working Group Comment at 17 (advocating for “two separate communications systems,” including both “full AI accountability ‘products’” and “a thoughtful summary format”); Google DeepMind Comment at 12 (“Where appropriate, additional technical information relating to AI system performance should also be provided for expert users and reviewers like consumer protection bodies and regulators.”).

118 See, e.g., Centre for Information Policy Leadership Comment at 5; CDT Comment at 23; Global Partners Digital Comment at 15; Stoyanovich Comment at 5.

119 See, e.g., Data & Society Comment at 8; Bipartisan Policy Center Comment at 7.

120 See EU AI Act , supra note 21, Articles 40-41 (authorizing the Commission to adopt common specifications to address AI system provider obligations).

121 See id., Articles 10 (data and data governance), 11 (technical documentation), 13 (transparency and provision of information to users), and Annex IV (setting minimum standards for technical documentation under Article 11). See also European Parliament, Amendments adopted by the European Parliament on 14 June 2023 on the Artificial Intelligence Act and amending certain Union legislative acts(June 14, 2023), including additional disclosure requirements for foundation model providers under Article 28b, including a requirement to “document and make publicly available a sufficiently detailed summary of the use of training data protected under copyright law.” (Article 28(b)(4)(c).