AI System Documentation

Documentation is a critical input to transparency and evaluation, whether internal or external, voluntary or required. Many commenters thought that AI developers should (and possibly should be required to) maintain documentation concerning model design choices, design of system controls, training data composition and pre-training, data the system uses in its operational state, and testing results and recalibrations for different system versions.161 Such documentation, which may be subject to intellectual property protections, informs consideration of appropriate deployment contexts. It helps answer questions about whose interests were considered in AI system development and how AI actors balanced various trustworthy AI attributes. Documentation also helps AI actors themselves reflect on impacts, such as discriminatory system outputs. With respect to discrimination, “tracing the decision making of the human developers, understanding the source of the bias in the model, and reviewing the data” can help to identify and remedy bias.162

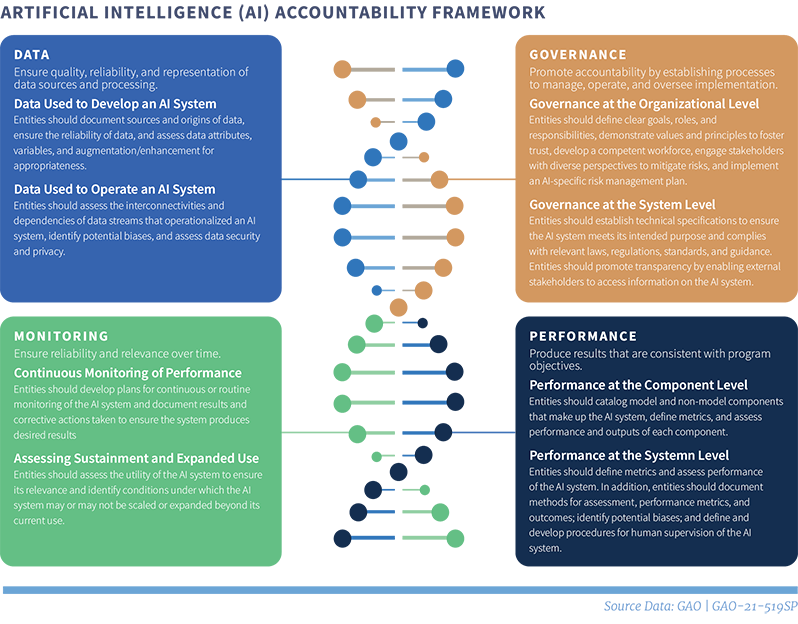

The United States Government Accountability Office (GAO) produced an AI Accountability Framework that makes recommendations about both documentation and evaluations for federal agencies; this guidance could also serve other AI actors.163 Documentation practices figure prominently in the GAO guidance, which covers training data provenance and preparation, model performance metrics and testing, key design choices, updates, and change logs, among other things.

Commenters thought that requirements to provide information about a system should be “standard” for any AI offering.164 Per one commenter, deployers should record “what was deployed, what changes were made between development and deployment, and any issues encountered during deployment… [and should keep] incident response investigation and mitigation procedures.”165 Another commenter proposed supply chain documentation and monitoring for foundation models.166 In general, record-keeping integrated into evaluation is the basis for “end to end” accountability.167

Appropriate documentation will vary by type of system. For generative AI, additional documentation may be important particularly to elucidate how training data subject to intellectual property rights figures into system outputs.168 More stringent documentation is also useful for information integrity purposes. For example, maintaining documentation of inputs and outputs to the AI system can improve accountability for scientific communication and “be placed into a chain of evidence” as necessary for reproducible results.169

In addition to documentation creation, there is the question of retention. Retention requirements for financial records imposed by the SEC and IRS are useful referents.170 In general, we agree that documentation concerning the development and deployment of AI “should be retained for as long as the AI system is in development, while it is in deployment, and an additional” number of years after.171

161 See, e.g., PWC Comment at A9 (documentation required for auditing includes: “Information about the organization’s governance structure and broader control environment…; Description of the development process, algorithm, architecture, and configuration of the model, as well as the design of controls in each respective aspect of the system; Data used to train the system and consumed by the system in its operational state; Documentation of any pre-processing steps applied to the training data; Documentation of the system’s compliance with legal, regulatory, and ethical specifications; Results of testing performed throughout the development process and during the subject period; Design and results of any recalibration performed during the period; Information about the design of controls to detect emergent properties and bugs”); Audit AI Comment at 9 (“The minimum amount kept for any particular model / application pairing should be the amount necessary to retrain the model - this includes the dataset, architecture, hyperparameters, initialization, training schedule, randomization seed, and any other relevant information.”); American Association of Independent Music et al. Comment at 5 (“Proper record-keeping should also include documentation about (i) the articulated purpose of the AI model itself and its intended outputs, (ii) the AI system’s overall system functioning, (iii) the individual or organization responsible for the AI system (including who is responsible for the ingesting materials, who is responsible for any foundational AI model, who is responsible for any fine tuning of the AI model, who is deploying the AI system, etc.), (iv) risk assessments concerning the potential misuse and abuse of such a model, and (v) what parameters and processes were used, and what decisions were made, during the AI system development and deployment.”).

162 Accenture Comment at 4.

163 GAO, supra note 3.

164 See, e.g., CDT Comment at 24 (“Accountability …requires disclosure of information such as how a system was trained and on what data sets, its intended uses, how it works and is structured, and other information that permits the intended audiences (which can include affected individuals, policymakers, researchers, and others) to understand how and why the system makes particular decisions.”); IBM Comment at 4.

165 Protofect Comment at 7.

166 Stanford Institute for Human-Centered AI Center for Research on Foundation Models Comment at 4-5 (noting that, “as a direct analogy to” the Software Bill of Materials, “the federal government should track the assets and supply chain in the foundation model ecosystem to understand market structure, address supply chain risk, and promote resiliency,” and that “[a]s an example implementation, Stanford’s Ecosystem Graphs currently documents the foundation model ecosystem, supporting a variety of downstream policy use cases and scientific analyses”).

167 See, e.g., Ada Lovelace Institute Comment at 6 (“Accountability practices must occur throughout the lifecycle of an AI system, from early ideation and problem formulation to post-deployment. For example, you might layer a [data protection impact assessment] or a datasheet at the design phase, an internal audit at testing, and an audit by a third-party at (re)deployment.”). See also Resolution Economics Comment at 3 (an AI system should be audited every time its algorithm receives a major update).

168 See, e.g., CCC Comment at 2-3; Copyright Alliance Comment at 6 and 6 n.9 (discussing importance of records on training data for copyright forensics and audits).

169 STM Comment at 2 (“[W]hen applying AI in the context of scholarly communications, a record of inputs and outputs to the AI system should be maintained to ensure that the AI system and its outputs can be placed into a chain of evidence and results can be more easily reproduced, including references to scholarly works that have been used.”). See also CCC Comment at 4 (“Without verifiable and auditable tracking of inputs, it is impossible to ensure that the resulting outputs are reliable.”).

170 PWC Comment at A10 (suggesting record “retention requirements of the SEC and IRS may be an appropriate starting point” for AI).

171 See, e.g., DLA Piper Comment at 24 (recommending “three years once a system is no longer in active use or development to maintain audit trails and institutional knowledge”); American Association of Independent Music et al. Comment at 5 (to “at least seven years following [an AI system’s] discontinuance[.]”).